By Benjamin McMillan, Senior Consultant

Background

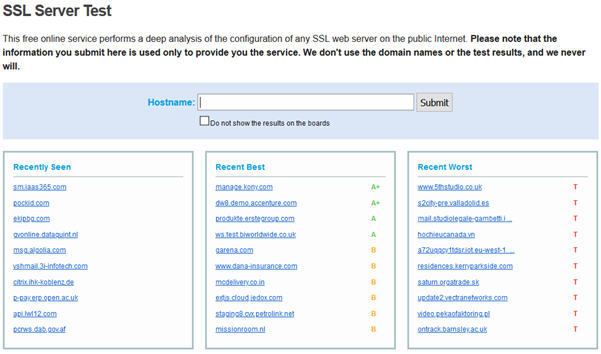

You might be familiar with the SSL Server Test provided by Qualys[1] (currently hovering around 12,500 in Alexa's “global internet engagement” ranking), which is widely used to audit the SSL configuration of public web servers.

In response to potential data-harvesting concerns about using their service, Qualys makes the following statement:

“Please note that the information you submit here is used only to provide you the service. We don’t use the domain names or the test results, and we never will.”

However, for reasons unknown, the page displays a table of recently tested websites that updates on every refresh. So while Qualys itself may not use your data, it essentially provides a public live feed for anyone else.

The public live feed of recently tested websites on Qualys

I can think of two immediate issues.

- Exposure of non-indexed websites that, while public, are not intended to be known.

- Exposure of transient websites, i.e. websites that are public for only a few days (or hours), which may be unfinished and unsecured, or may have been exposed specifically to test their SSL configuration.

Users are given a tick box option to keep their results from being publicly displayed, but it appears not many have time for that. So what would happen if someone were to watch this space?

The first thing I noticed was that vastly more unique websites were observed on weekdays, and that .au websites were seen mostly during typical Australian business hours. To me this suggests the site is used mostly by people manually submitting tests through the web GUI rather than by bots. There is also an API, but its “publish” parameter defaults to “off” if unset.

GUI, opt out.

API, opt in.

¯\_(ツ)_/¯
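For anyone scripting tests, the sketch below builds an API request URL with the “publish” parameter stated explicitly rather than relying on the default. It assumes the SSL Labs API v3 `analyze` endpoint and its `host` and `publish` parameters; `build_analyze_url` is a hypothetical helper, and it only builds the URL without sending any request.

```python
from urllib.parse import urlencode

# Assumption: the SSL Labs API v3 "analyze" endpoint. Check the current
# SSL Labs API documentation before relying on it, as versions change.
ANALYZE_ENDPOINT = "https://api.ssllabs.com/api/v3/analyze"


def build_analyze_url(host: str, publish: bool = False) -> str:
    """Build an analyze URL with the publish flag set explicitly.

    The API treats an unset "publish" as off, but stating it makes the
    intent obvious to anyone reading or modifying the script later.
    """
    params = {"host": host, "publish": "on" if publish else "off"}
    return f"{ANALYZE_ENDPOINT}?{urlencode(params)}"


print(build_analyze_url("dev.example.com"))
# https://api.ssllabs.com/api/v3/analyze?host=dev.example.com&publish=off
```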

In total, 60,963 unique websites were seen over just five days.
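The article does not describe how the feed was collected, so the following is only a hypothetical sketch of the kind of bookkeeping behind these observations. It assumes each poll of the feed is recorded as a UTC timestamp plus the hostnames shown at that moment, keeps the first time each hostname was seen, and buckets those first sightings by weekday and, for .au hosts, by Australian hour. The function names, the fixed UTC+10 offset and the sample hostnames are all illustrative assumptions, not data from the article.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

# Simplification: fixed UTC+10 (AEST); daylight saving time is ignored.
AEST = timezone(timedelta(hours=10))


def first_seen(snapshots):
    """Map each hostname to the time of the first snapshot it appeared in.

    `snapshots` is an iterable of (aware_utc_datetime, hostnames) pairs,
    one pair per poll of the feed. Hostnames are lowercased so that case
    variants of the same site are only counted once.
    """
    seen = {}
    for taken_at, hosts in sorted(snapshots, key=lambda snap: snap[0]):
        for host in hosts:
            seen.setdefault(host.lower(), taken_at)
    return seen


def weekday_counts(seen):
    """Count unique hosts by the UTC weekday on which each was first seen."""
    return Counter(ts.strftime("%A") for ts in seen.values())


def au_hour_counts(seen):
    """Count unique .au hosts by the AEST hour in which each was first seen."""
    return Counter(
        ts.astimezone(AEST).hour for host, ts in seen.items() if host.endswith(".au")
    )


# Example: two polls five minutes apart (made-up hostnames).
polls = [
    (datetime(2021, 3, 1, 0, 0, tzinfo=timezone.utc), ["dev.example.com.au", "vpn.example.org"]),
    (datetime(2021, 3, 1, 0, 5, tzinfo=timezone.utc), ["DEV.example.com.au", "test.example.net"]),
]
seen = first_seen(polls)
print(len(seen))  # 3
print(au_hour_counts(seen))  # Counter({10: 1})
```

Deduplicating on first sighting means a site that stays in the feed across many polls is still counted once, which is what a “unique websites” total needs.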

From here it’s really up to the imagination. The most obvious first search is for subdomains typically considered sensitive, so here are some examples with their hit counts.

Development:

- TEST. – 192

- DEV. – 155

- UAT. – 60

- GIT. – 26

Remote Access:

- VPN. – 201

- REMOTE. – 135

- CITRIX. – 71

Storage:

- FTP. – 53

- FS. – 22

- NAS. – 12

- BACKUP. – 9

Mail:

- OWA. – 72

- OUTLOOK. – 28

(This of course doesn’t account for variations, e.g. dev1., dev2., dev3., ctx., vpn-srv., etc.)
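The article does not say how these counts were produced. As a hypothetical sketch, hits like these can be counted by matching the leftmost label of each unique hostname against a list of sensitive names; `SENSITIVE_LABELS` and `label_hits` are illustrative names, and the optional digit handling covers the dev1.-style variations noted above (but not ctx. or vpn-srv.).

```python
import re
from collections import Counter

# Labels taken from the lists above; extend as needed.
SENSITIVE_LABELS = ["test", "dev", "uat", "git", "vpn", "remote", "citrix",
                    "ftp", "fs", "nas", "backup", "owa", "outlook"]


def label_hits(hosts, labels=SENSITIVE_LABELS, allow_digits=False):
    """Count unique hostnames whose leftmost label is one of `labels`.

    With allow_digits=True a trailing number is also accepted, so dev1.,
    dev2. and dev3. are all counted under "dev". Variants such as ctx. or
    vpn-srv. still need their own entries.
    """
    digits = r"\d*" if allow_digits else ""
    patterns = {lab: re.compile(rf"{re.escape(lab)}{digits}\.") for lab in labels}
    counts = Counter()
    for host in {h.lower() for h in hosts}:
        for lab, pattern in patterns.items():
            if pattern.match(host):  # match() anchors at the start of the string
                counts[lab] += 1
                break
    return counts


hosts = ["DEV.example.com", "dev.example.com", "dev2.example.com.au",
         "vpn.example.org", "www.example.com"]
print(label_hits(hosts)["dev"])                     # 1 (dev2. is not matched)
print(label_hits(hosts, allow_digits=True)["dev"])  # 2
```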

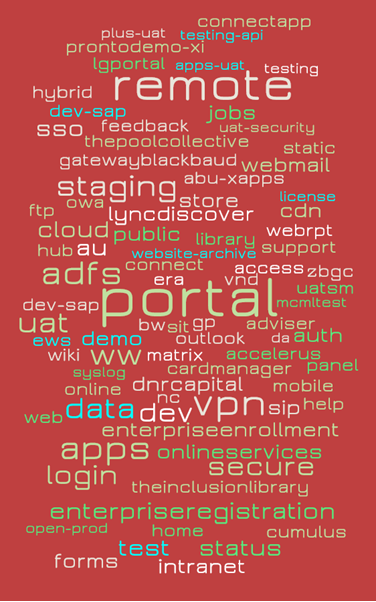

I then took a subset of just the Australian websites, defined as those with the .au TLD or those geographically hosted in Australia.[2] The result was 1,453 unique websites, and the visual above shows all of their subdomains (excluding www. and mail.).
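A minimal sketch of that subset, assuming the geolocation lookup has already been done separately and produced a set of hostnames hosted in Australia (the lookup itself is not shown, and `hosted_in_au`, `australian_subset` and `subdomain_labels` are hypothetical names). The subset is the union of the two criteria, and the label count skips www. and mail. as described.

```python
from collections import Counter

EXCLUDED = {"www", "mail"}


def australian_subset(hosts, hosted_in_au):
    """Unique hostnames with the .au TLD, or listed in `hosted_in_au`.

    `hosted_in_au` is assumed to be a set of lowercase hostnames produced by
    a separate IP geolocation step, which is not shown here.
    """
    unique = {h.lower() for h in hosts}
    return {h for h in unique if h.endswith(".au") or h in hosted_in_au}


def subdomain_labels(hosts, excluded=EXCLUDED):
    """Count leftmost labels, skipping www. and mail.

    Naive: for a bare domain such as example.com.au the leftmost label is
    the registered name rather than a subdomain; a public suffix list
    would be needed to tell the two apart.
    """
    firsts = (h.split(".")[0] for h in hosts)
    return Counter(label for label in firsts if label not in excluded)


hosts = ["www.example.com.au", "Shop.example.com.au", "vpn.example.com",
         "mail.example.net", "dev.example.org"]
subset = australian_subset(hosts, hosted_in_au={"vpn.example.com"})
print(sorted(subset))  # ['shop.example.com.au', 'vpn.example.com', 'www.example.com.au']
print(subdomain_labels(subset))
```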

How about the opposite end of the domain name, such as cloud providers?

- *.amazonaws.com – 244

- *.azurewebsites.net – 112

- *.blob.core.windows.net – 14

…or this

- *.gov.au – 128
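Counting by suffix works the same way from the other end. The sketch below reuses the wildcard patterns listed above with Python's `fnmatch` module; `PATTERNS` and `pattern_hits` are hypothetical names and the hostnames are made-up examples, not sites from the feed.

```python
from collections import Counter
from fnmatch import fnmatchcase

# Wildcard patterns from the lists above.
PATTERNS = ["*.amazonaws.com", "*.azurewebsites.net",
            "*.blob.core.windows.net", "*.gov.au"]


def pattern_hits(hosts, patterns=PATTERNS):
    """Count unique hostnames matching each shell-style wildcard pattern.

    In fnmatch, "*" also matches dots, so "*.amazonaws.com" covers
    multi-label names such as bucket.s3.ap-southeast-2.amazonaws.com.
    fnmatchcase is used after lowercasing so matching does not vary by OS.
    """
    unique = {h.lower() for h in hosts}
    return Counter({p: sum(fnmatchcase(h, p) for h in unique) for p in patterns})


hosts = ["bucket.s3.ap-southeast-2.amazonaws.com", "MyApp.azurewebsites.net",
         "data.blob.core.windows.net", "www.example.gov.au", "example.com"]
for pattern, count in pattern_hits(hosts).items():
    print(pattern, count)
```

Because each pattern requires the leading dot, a bare gov.au would not be counted under *.gov.au.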

This was about as far as I was willing to probe, but I’m obviously leaving a broad attack surface unexplored. How many of these sites would have a basic auth prompt? The techniques used in tools such as WitnessMe[3] and slurp[4] also come to mind.

In the end, Qualys’ SSL Server Test is still a useful tool; just remember…

References

[1] SSL Server Test provided by Qualys

https://www.ssllabs.com/ssltest/

[2] IP Location

https://iplocation.com/

[3] WitnessMe Github Page

https://github.com/byt3bl33d3r/WitnessMe

[4] slurp Github Page

https://github.com/0xbharath/slurp