In today’s digital world, phishing has taken on a new form that blends human tricks with advanced technology. It’s not just about old-fashioned deception anymore.

We’re witnessing a blend of human cunning and the power of artificial intelligence. As we navigate the internet’s complexities, it’s vital to grasp how phishing has evolved – from the tricks used by people to the looming threat posed by AI.

Come with us on this journey to uncover the secrets of phishing, understand how it’s changed, and examine the new risks it brings, which are shaking up our ways of defending against online threats.

What is Phishing?

Imagine this scenario: an email lands in your inbox, seemingly too good to be true or pressing with urgency. It might promise lottery winnings, demand immediate action, or mimic a familiar company. But beware – the hook is set. Phishing, the crafty technique employed by cyber tricksters, entices you to reveal sensitive information like passwords, credit card details, or personal data. The catch? It’s all smoke and mirrors.

How do you decipher the genuine from the deception? Scrutinise the fine print. Verify the sender’s email address – does it align with the official one? Spelling mistakes or high-pressure messages should raise alarms. Before clicking any links, hover over them to uncover their actual destinations. And keep in mind: reputable companies won’t solicit sensitive information via email.
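The two manual checks above (comparing the sender's address with the official one, and hovering over a link to see where it really goes) can be approximated in code. The following is a minimal sketch using only the Python standard library. The function names, the `example.com` official domain, and the `attacker.test` host are illustrative assumptions, not part of any real product, and a heuristic this simple will miss many real-world tricks.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect (visible text, href) pairs for every <a> tag in an HTML email body."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def sender_matches(sender_address, official_domain):
    """True when the sender's domain is the official domain or a subdomain of it."""
    domain = sender_address.rsplit("@", 1)[-1].lower()
    official = official_domain.lower()
    return domain == official or domain.endswith("." + official)


def suspicious_links(html_body):
    """Return links whose visible text looks like a host that differs from the href's host."""
    parser = LinkCollector()
    parser.feed(html_body)
    flagged = []
    for text, href in parser.links:
        # Only treat the visible text as an address if it looks like one.
        if not text or " " in text or "." not in text:
            continue
        shown = urlparse(text if "://" in text else "//" + text).hostname
        actual = urlparse(href).hostname
        if shown and actual and shown != actual:
            flagged.append((text, href))
    return flagged


# Hypothetical email: the first link displays one host but points to another.
body = (
    '<p><a href="http://login.attacker.test/reset">www.example.com</a> '
    '<a href="https://www.example.com/help">Help</a></p>'
)
print(sender_matches("support@example.com.attacker.test", "example.com"))  # False
print(suspicious_links(body))
```

A lookalike sender such as `support@example.com.attacker.test` fails the check because its domain merely contains the official one rather than ending with it, which is the same thing a careful reader looks for by eye.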

Research shows that upwards of 95 percent of cyber breaches within the last decade have involved threat actors using various types of phishing and social engineering attacks as an initial entry point into organisations of different sizes. The perspective of the malicious actor can be put quite simply: “Why expend resources to research highly technical options of breaching an organisation when you can rely on human weakness without breaking a sweat?”

Phishing isn’t merely an annoyance in the digital world; it’s a lurking threat ready to ambush unsuspecting businesses. The repercussions? Monumental. Beyond the immediate loss of critical data and financial breaches, phishing ravages businesses by staining their reputation, eroding customer trust, and accruing substantial financial losses through regulatory penalties and legal battles. It disrupts day-to-day operations, leading to downtimes and productivity slumps as teams labour to contain the fallout. The aftermath of a successful phishing attack casts a lingering shadow over the stability and sustainability of the targeted organisation, extending far beyond the initial breach. In our deeply interconnected world, the impact of phishing transcends digital boundaries, leaving businesses to grapple with the consequences of falling for a single meticulously crafted deceptive email.

In the ever-evolving landscape of cyber threats, traditional phishing has given way to a new, more sinister player: AI-generated phishing attacks. Unlike their conventional counterparts, these AI-driven assaults are sophisticated, posing elevated dangers to organisations’ cyber security. With an elusive nature, they blur the lines between genuine and fraudulent communication, amplifying the threat of undetectability.

Defending against these AI-powered tactics requires a recalibration of defence mechanisms, as traditional methods often fall short against their nuanced strategies.

In this report, we delve into the perils of AI-driven phishing attacks, exploring their inherent dangers, the looming threat of being undetectable, and crucial strategies to fortify organisations against these stealthy adversaries.

The dangers of AI-driven phishing attacks

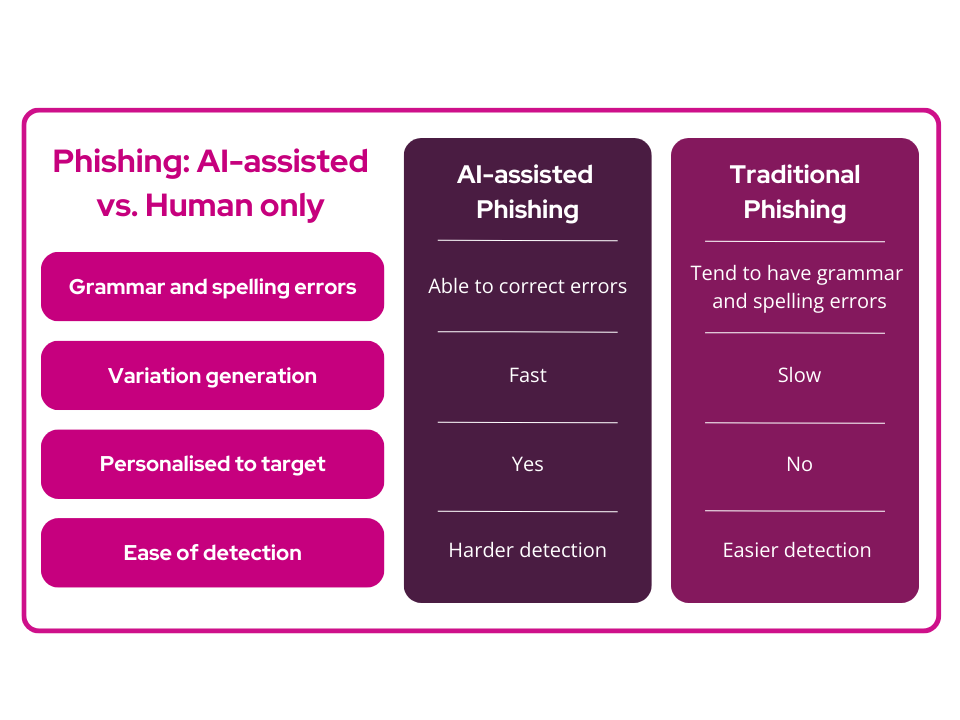

The emergence of AI-driven phishing attacks signals a significant shift in the cyber security landscape, introducing a level of sophistication and adaptability that challenges conventional defence strategies. Unlike traditional phishing tactics known for their identifiable flaws such as grammatical errors and lack of personalisation, AI-powered attacks elevate the game substantially. These advanced tools swiftly rectify spelling mistakes and grammar issues, circumventing email filters reliant on exact text matches.

Here’s where it gets intriguing. AI capabilities extend beyond mere error correction, displaying remarkable speed in generating diverse versions of phishing emails. Cyber adversaries leverage AI to traverse social media platforms and extract insights to craft highly personalised messages, which makes it substantially harder to distinguish genuine correspondence from fraudulent emails.

What’s particularly concerning for cyber security experts is the proficiency of these AI-driven assaults in eluding traditional defence mechanisms. These tactics involve rewriting flagged emails with a professional tone, leveraging AI prowess to evade detection and optimise attackers’ time efficiency. Additionally, with AI tools combing through extensive social media data, attackers can cast a wide net, targeting a broader audience with tailored messages. The emergence of AI-generated phishing poses a formidable challenge, compelling a reevaluation of defence strategies against these intricately personalised cyber threats.

AI-driven phishing potentially outperforms traditional phishing in various ways:

- Swift error refinement: mistakes in phishing emails like spelling errors and grammar issues are swiftly rectified

- Bypassing email filters: emails and subject lines are written to bypass traditional email filtering methods

- Rapid email variations: diverse variations of phishing emails take mere seconds to generate

- Highly personalised content: generative AI is leveraged to craft highly personalised emails by mining personal data about targets from social media platforms

- Adaptability against detection: emails flagged as spam are swiftly rewritten, saving attackers significant time

- Scalability through data scraping: vast amounts of social media data can be scraped to create realistic messages, enabling attackers to target a broader audience with tailored content
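To see why rewritten variants slip past filters that rely on exact text matches, consider the sketch below. It assumes a deliberately simplistic filter that blocks an email only when a hash of its normalised body matches a previously reported one. The `fingerprint` and `is_blocked` helpers and both sample messages are hypothetical, and real filters combine many more signals than this.

```python
import hashlib


def fingerprint(body):
    """Hash the whitespace-normalised, lower-cased body, as an exact-match filter might."""
    normalised = " ".join(body.lower().split())
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


# Hypothetical blocklist seeded with one previously reported phishing email.
reported = "Your account has been suspended. Verify your password now to restore access."
blocklist = {fingerprint(reported)}


def is_blocked(body):
    """True only when the body matches a known fingerprint exactly."""
    return fingerprint(body) in blocklist


# A reworded variant carrying the same request, as an attacker's tool might produce.
variant = "We have paused your account. Please confirm your password to regain access."

print(is_blocked(reported))          # True: the known message is caught
print(is_blocked(reported.upper()))  # True: case changes are normalised away
print(is_blocked(variant))           # False: the rewording has a different fingerprint
```

Normalising case and whitespace handles trivial edits, but any genuine rewording yields a new fingerprint, so each variant would have to be reported before it could be blocked.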

The threat of undetectability

The evolving landscape of AI-driven phishing emails presents a pressing concern: the looming possibility of these attacks becoming exceptionally difficult for both humans and AI-powered email filtering solutions to detect. The intricate nature of AI-generated phishing emails poses significant hurdles for conventional detection methods. AI tools adeptly refine these messages, sidestepping the signature-based detection and reputation checks commonly relied upon for spotting fraudulent emails.

Insights from Egress Software Technologies’ threat intelligence team shed light on the formidable challenge these emails present. Out of 1.7 million phishing emails analysed, a staggering 44.9% contained fewer than 100 characters, with an additional 26.5% containing fewer than 500 characters. This poses a substantial hurdle for detection systems, particularly those employing AI-based detectors. These detectors require a minimum number of characters for reliable analysis: 250 characters for any assessment and at least 500 for dependable results. Because these two groups together account for 71.4% of the phishing emails analysed, an alarming majority fall below the threshold for dependable results and can evade AI-driven email filtering solutions. This leaves these emails beyond the reach of automated detection, necessitating human analysis, which is a resource-intensive process.
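The arithmetic behind these figures can be checked with a short sketch. It assumes that the "additional 26.5%" refers to emails of 100 to 499 characters, and it treats the 1.7 million total as exact even though the source gives it as a rounded figure. The constant and function names are illustrative only. Percentages are stored in tenths of a percent so the sums stay in whole numbers.

```python
TOTAL_EMAILS = 1_700_000        # phishing emails analysed (rounded figure from the text)
UNDER_100_TENTHS = 449          # 44.9% contained fewer than 100 characters
FROM_100_TO_499_TENTHS = 265    # a further 26.5% contained fewer than 500 (assumed 100-499)

MIN_ASSESS_CHARS = 250          # minimum length for the detector to assess an email
MIN_RELIABLE_CHARS = 500        # minimum length for dependable results


def detector_coverage(body):
    """Classify an email body by how much an AI-based detector could do with it."""
    length = len(body)
    if length < MIN_ASSESS_CHARS:
        return "too short to assess"
    if length < MIN_RELIABLE_CHARS:
        return "assessable, not dependable"
    return "dependable"


# Share and count of emails below the 500-character threshold.
below_reliable_tenths = UNDER_100_TENTHS + FROM_100_TO_499_TENTHS
emails_below_reliable = TOTAL_EMAILS * below_reliable_tenths // 1000

print(f"{below_reliable_tenths / 10}% of emails fall below {MIN_RELIABLE_CHARS} characters")
print(f"That is roughly {emails_below_reliable:,} of {TOTAL_EMAILS:,} emails")
print(detector_coverage("Invoice overdue. Pay here: http://pay.attacker.test"))
```

On these assumptions, about 1.2 million of the emails analysed would be too short for dependable automated analysis, and the 44.9% under 100 characters could not be assessed at all.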

Egress also highlighted a concerning trend: human-generated phishing campaigns analysed in 2023 were reported to have implemented at least two layers of obfuscation that bypassed 25% of Microsoft’s defences. As these human-led campaigns evolve to include heightened levels of obfuscation, they set a precedent for what can be expected from AI-generated emails. AI-generated phishing emails with a similar level of obfuscation and a greater level of variation than their human counterparts would pose an even greater challenge for detection systems, raising concerns about the potential undetectability of these sophisticated attacks in the future.

Other reasons why AI-generated emails may be incredibly difficult to detect include:

- Creative variation: AI-generated diverse variations of phishing emails make it challenging to identify recurring patterns for detection purposes

- Adaptive evasion tactics: vulnerabilities in specific email filtering or detection systems may be exploited by AI-led evasion techniques

- Multilingual capabilities: emails in multiple languages can be generated, increasing the reach and potential targets for phishing attacks

- Targeted content: highly targeted and personalised emails are enabled by AI, incorporating specific details or references that seem authentic to the recipient and are more likely to elicit a response

- Rapid adaptation: AI can readily adapt to changes in phishing detection methods, evolving to bypass newly implemented security measures

Defending the organisation

Defending the organisation against the sophisticated threats posed by AI-driven phishing demands a multifaceted approach that leverages advanced technologies. Rather than depending on traditional email filtering solutions that rely on static definition libraries or domain checks, organisations must pivot towards more robust defences. Email filtering solutions that harness Natural Language Processing (NLP) and Natural Language Understanding (NLU), including those built on Large Language Models (LLMs), offer a more proactive and nuanced defence strategy. These solutions delve beyond superficial indicators, analysing email content for linguistic markers of phishing and assessing context, intent, and anomalies in communication that might indicate potential threats.

The critical divergence between NLP/NLU-based solutions and traditional methods lies in their approach to content analysis. While conventional filters rely on rule-based checks or predefined patterns, NLP/NLU solutions comprehend the nuances and intent behind the text, enabling a more comprehensive understanding of the email content. This capability allows for the identification of subtle linguistic cues indicative of phishing attempts, providing a more effective defence against the evolving sophistication of AI-driven attacks.

To counteract the use of AI by cybercriminals, organisations need to embrace similar technologies for defence. Implementing cutting-edge AI-driven solutions empowers organisations to proactively detect and mitigate AI-generated phishing attempts, enabling a stronger security posture against these evolving threats. However, technology alone cannot guarantee absolute protection. It’s equally necessary to cultivate a culture of cyber awareness and preparedness among business users. Educating and continuously training employees to recognise and report suspicious activities or emails remains paramount. The vigilance and responsiveness of all members of an organisation form an indispensable layer of defence, augmenting the capabilities of advanced technological solutions.

Ready to fortify your organisation against evolving phishing threats? Sekuro’s Managed Security Service (MSS) acts as an extension of your security team. We provide fully managed 24/7/365 monitoring, detection, triage and response services. Secure your organisation today.

Sammy Chuks

Cyber Security Operations Manager, MSSP, Sekuro